How AI is shaping creative professions: insights from Anthropic and Adobe

On February 10, 2025, Anthropic, the AI company behind the frontier model Claude, published its first Anthropic Economic Index. This project is described as “an initiative aimed at understanding AI’s effects on labor markets and the economy over time.”

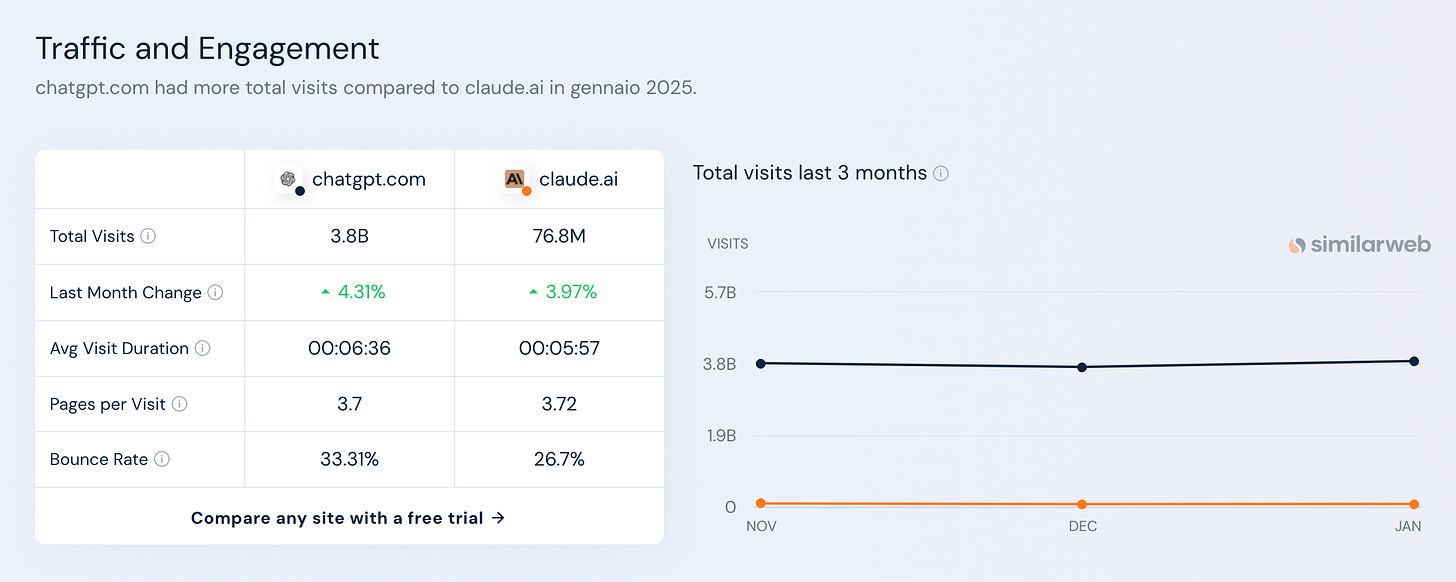

For readers of this newsletter (and beyond), this research is particularly interesting because it highlights how different industries—including the creative sector—are integrating AI into their workflows. Of course, Claude’s user base is significantly smaller than ChatGPT’s—about 1/50, according to Similarweb (source).

But with around 75 million visits per month, Claude still provides statistically meaningful insights.

What makes this study particularly valuable is its methodology. As Anthropic points out: “We don’t survey people on their AI use, or attempt to forecast the future; instead, we have direct data on how AI is actually being used.”

From our perspective:

Studies based on direct usage data are the most valuable.

A close second are those that survey a significant number of users.

And in a distant third place? The opinions and predictions of so-called experts and CEOs (like myself)—who, more often than not, might as well be reading tea leaves.

So, what does the Anthropic report reveal? A few key findings stand out:

1. The tasks users delegate to AI tend to be high-value activities, such as “critical thinking” and “quality writing.”

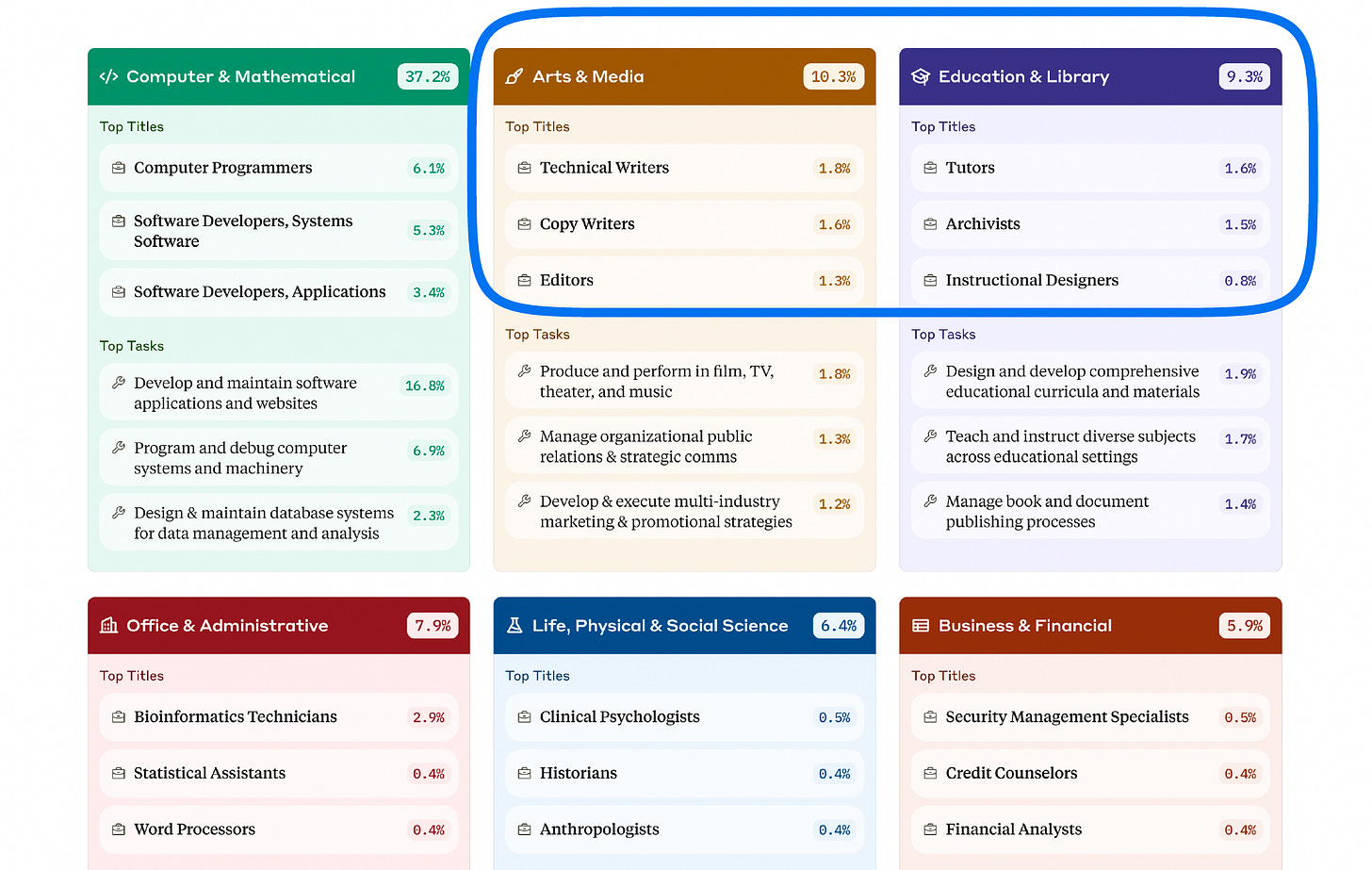

2. After Computer Science and Mathematics, the heaviest use of Claude comes from Arts & Media, followed closely by Education & Library.

3. Different Claude AI models are used for different purposes. Claude Opus, for example, is the preferred choice for “creative writing” and “educational content development.” Specifically, it is frequently used for:

• “Managing the book and document publishing process.”

• “Designing and developing comprehensive educational curricula and materials.”

• “Conducting academic research.”

Beyond Anthropic’s findings, we sifted through a mountain of reports from so-called authoritative sources like McKinsey, Gartner, Forrester, PwC, Deloitte, Bain, BCG, EY, and the WEF, and came away slightly disheartened: plenty of bold predictions, but little in the way of concrete, reliable data.

Fortunately, we came across a 2024 Adobe blog post featuring a survey of 2,541 creative professionals from North America, Europe, and Asia actively using AI. Admittedly, details on the survey’s methodology were scarce, but the findings were still worth noting:

83% of creative professionals said they are using generative AI tools in their work.

66% of surveyed creative pros who use generative AI tools say they’re making better content with AI.

58% of those who use generative AI tools say they’ve increased the quantity of content they create.

Additionally, 69% believe Gen AI will provide them with new ways to express their creativity.
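Note that the 66% and 58% figures apply only to respondents who already use generative AI, not to the whole sample. As a rough sketch, the Python snippet below converts them into shares of all 2,541 respondents. It assumes the published percentages are exact and that both sub-figures are measured against the 83% who use the tools; the function and variable names are ours, chosen for illustration, and the headcounts are approximations since Adobe did not publish raw counts.

```python
# Figures quoted from the Adobe survey; everything else is illustrative.
RESPONDENTS = 2541
SHARE_USING_GEN_AI = 0.83      # share of all respondents
SHARE_BETTER_CONTENT = 0.66    # share of gen-AI users (assumed base)
SHARE_MORE_CONTENT = 0.58      # share of gen-AI users (assumed base)


def share_of_all(share_of_users: float) -> float:
    """Convert a share of gen-AI users into a share of all respondents."""
    return SHARE_USING_GEN_AI * share_of_users


def approx_headcount(share: float) -> int:
    """Approximate number of respondents behind a share of the full sample."""
    return round(RESPONDENTS * share)


users = approx_headcount(SHARE_USING_GEN_AI)
better = share_of_all(SHARE_BETTER_CONTENT)
more = share_of_all(SHARE_MORE_CONTENT)

print(f"Gen-AI users: ~{users} of {RESPONDENTS}")
print(f"Better content: ~{better:.0%} of all respondents")
print(f"More content: ~{more:.0%} of all respondents")
```

Under these assumptions, roughly 55% of all surveyed creatives report better content and about 48% report producing more of it, which is a more modest picture than the headline numbers suggest.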

The takeaway? AI is no longer an experimental technology—it’s becoming a standard tool in the creative process, whether you like it or not.

🛠️ Deep Research AI Enhanced

Since DeepSeek appeared, many AI providers have introduced a deep research function. The name varies slightly from vendor to vendor, but the goal is the same for all: to answer complex questions in depth. Let’s compare them.

1. Perplexity Deep Research

Perplexity’s Deep Research mode combines DeepSeek R1’s reasoning with GPT-4o and Claude-3 for cross-domain analysis. It autonomously browses 50–100 sources per query, categorizing findings into sections like "Market Trends" or "Technical Specifications". For example, when researching quantum computing advancements, it identified 12 key innovations from 2022–2025, including error-corrected qubits and photonic processors, with 94% citation accuracy in tests.

Strengths:

Multi-format exports: Reports downloadable as PDFs or shared via collaborative platforms.

Hybrid searches: Combines academic databases, social media, and video transcripts.

Weaknesses:

Model dependency: Performance fluctuates with DeepSeek R1’s updates, occasionally missing niche technical details.

2. ChatGPT Deep Research

OpenAI’s Deep Research uses the o3 model to execute Python scripts for data analysis and cross-validate sources.

Strengths:

Scientific rigor: Outperformed Gemini in medical literature reviews, reducing hallucination rates to 2.3%.

Custom workflows: Users submit follow-up prompts to refine taxonomies or statistical methods.

Weaknesses:

Cost barriers: At $200/month, it’s 10x pricier than competitors, excluding startups and academics.

3. Grok-3 DeepSearch

xAI’s DeepSearch leverages real-time X (Twitter) data for trend forecasting. During testing, it accurately predicted a 19% surge in Tesla’s stock post-Cybertruck recall by analyzing 280,000 tweets, but fabricated 12% of academic references in climate policy reports.

Strengths:

Speed: Processes queries 40% faster than Perplexity in travel planning tests.

Creative synthesis: Suggested unconventional travel itineraries (e.g., Kerala backwaters over Goa beaches).

Weaknesses:

Fact-checking failures: Invented URLs for 14% of cited sources in AI ethics reports.

4. Gemini Deep Research

Google’s Deep Research employs Gemini 1.5 Pro’s 1M-token context to analyze trends across Google Scholar and News. In a test, while it summarized 78% of renewable energy policies accurately, it struggled with technical queries like semiconductor fabrication node comparisons.

Strengths:

Workspace integration: Auto-generates Sheets templates from research data.

Compliance: Adheres to GDPR and SOC2 standards for enterprise use.

Weaknesses:

Verbosity: Over-explained basic concepts in 33% of coding-related queries.